Supercomputers

BRIAN HOYLE

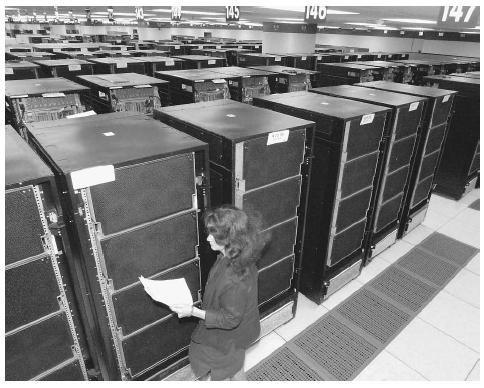

A supercomputer is a powerful computer that can store and process far more information than a conventional personal computer.

An illustrative comparison can be made between the hard drive capacity of a personal computer and that of a supercomputer. Hard drive capacity is measured in gigabytes. A gigabyte is one billion bytes. A byte is a unit of data that is eight binary digits (i.e., 0s and 1s) long; this is enough data to represent a number, a letter, or a typographic symbol. Premium personal computers have a hard drive capable of storing on the order of 30 gigabytes of information. In contrast, a supercomputer has a capacity of 200 to 300 gigabytes or more.
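The units in this comparison can be checked with a few lines of arithmetic. The following is a minimal sketch that uses only the figures quoted above; the variable names are illustrative, and the letter "A" is an arbitrary example.

```python
BITS_PER_BYTE = 8
BYTES_PER_GIGABYTE = 10**9  # one billion bytes, as defined in the text

# Eight binary digits can form 2**8 distinct patterns.
patterns_per_byte = 2 ** BITS_PER_BYTE

# One byte is enough for a letter: "A" is stored as the number 65.
letter_code = ord("A")
letter_bits = format(letter_code, "08b")

# Capacities quoted in the text, converted to bytes.
pc_bytes = 30 * BYTES_PER_GIGABYTE
super_low_bytes = 200 * BYTES_PER_GIGABYTE
super_high_bytes = 300 * BYTES_PER_GIGABYTE

print(patterns_per_byte)            # 256
print(letter_code, letter_bits)     # 65 01000001
print(super_low_bytes / pc_bytes)   # lower bound of the capacity ratio
print(super_high_bytes / pc_bytes)  # upper bound of the capacity ratio
```

On these figures, the supercomputer holds roughly seven to ten times as much data as the personal computer.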

Another useful comparison between supercomputers and personal computers is in the number of processors in each machine. A processor is the circuitry responsible for handling the instructions that drive a computer. Personal computers have a single processor. The largest supercomputers have thousands of processors.

This enormous computational power makes supercomputers capable of handling large amounts of data and processing information extremely quickly. For example, in April 2002, a Japanese supercomputer that contains 5,104 processors established a calculation speed record of 35,600 gigaflops (a gigaflop is one billion floating-point calculations per second). This exceeded the old record held by the ASCI White-Pacific supercomputer located at the Lawrence Livermore National Laboratory in Livermore, California. The Livermore supercomputer, which is equipped with over 7,000 processors, achieves 7,226 gigaflops.

These speeds are a far cry from that of the first successful supercomputer, the CDC 6600, which Seymour Cray (later the founder of Cray Research) designed at Control Data Corporation and which was introduced in 1964. His computer had a speed of 9 megaflops, millions of times slower than the present-day versions. Still, at that time, the CDC 6600 was an impressive advance in computer technology.
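The gap between these machines is easier to appreciate when the quoted speeds are put in the same units. The sketch below is only arithmetic on the figures given in the text (35,600 gigaflops on 5,104 processors, 7,226 gigaflops, and 9 megaflops); the variable names are illustrative.

```python
GIGA = 10**9
MEGA = 10**6

# Speeds quoted in the text, converted to calculations per second.
japanese_flops = 35_600 * GIGA
asci_white_flops = 7_226 * GIGA
cdc_6600_flops = 9 * MEGA

# How many times faster the 2002 record holder is than each older machine.
record_ratio = japanese_flops / asci_white_flops
cdc_ratio = japanese_flops / cdc_6600_flops

# Average speed contributed by each of the 5,104 processors, in gigaflops.
per_processor_gigaflops = japanese_flops / 5_104 / GIGA

print(f"{record_ratio:.2f}")             # 4.93
print(f"{cdc_ratio:,.0f}")               # 3,955,556
print(f"{per_processor_gigaflops:.2f}")  # 6.97
```

On these numbers, the 2002 record is about five times the previous one and roughly four million times the speed of the CDC 6600.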

Beginning around 1995, another approach to designing supercomputers appeared. In grid computing, thousands of individual computers are networked together, even via the Internet. The combined computational power can exceed that of the all-in-one supercomputer at far less cost. In the grid approach, a problem can be broken down into components, and the components can be parceled out to the various computers. As the component problems are solved, the solutions are pieced back together mathematically to generate the overall solution.
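The divide, distribute, and recombine pattern described above can be sketched on a single machine. In this hedged illustration, worker threads stand in for the networked computers, and the problem (summing the squares of a list of numbers) is an arbitrary example; the function names are hypothetical and do not come from any particular grid system.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(numbers, n_chunks):
    """Break the full problem into roughly equal component problems."""
    size = max(1, -(-len(numbers) // n_chunks))  # ceiling division
    return [numbers[i:i + size] for i in range(0, len(numbers), size)]


def solve_component(chunk):
    """Work done by one computer: sum the squares in its chunk."""
    return sum(x * x for x in chunk)


def grid_sum_of_squares(numbers, n_workers=4):
    chunks = split_into_chunks(numbers, n_workers)
    # Each worker thread stands in for one networked computer.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_results = list(pool.map(solve_component, chunks))
    # Piece the component solutions back together.
    return sum(partial_results)


total = grid_sum_of_squares(list(range(1, 1001)))
print(total)  # 333833500
```

A real grid must also cope with network delays and with machines that fail or return late, which this sketch leaves out.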

The phenomenally fast calculation speeds of present-day supercomputers essentially correspond to "real time," meaning an event can be monitored or analyzed as it occurs. For example, a detailed weather map, which would take a personal computer several days to compile, can be compiled on a supercomputer in just a few minutes.

Supercomputers like the Japanese machine are built to model events such as climate change, global warming, and earthquake patterns. Increasingly, however, supercomputers are being used for security purposes such as the analysis of electronic transmissions (e.g., email, faxes, telephone calls) for codes. For example, a network of supercomputers and satellites called Echelon is used by the United States, Canada, the United Kingdom, Australia, and New Zealand to monitor electronic communications. The stated purpose of Echelon is to combat terrorism and organized crime.

The next generation of supercomputers is under development. Three particularly promising technologies are being explored. The first of these is optical computing, in which light, rather than electrons, is used to carry information. Because light moves much faster than an electron can, the speed of transmission is greater.

The second technology is known as DNA computing. Here, calculations are carried out by recombining DNA in different sequences. The sequences that are favored and persist represent the optimal solution. In principle, solutions to problems can be deduced even before the problem has actually appeared.

The third technology is called quantum computing. Properties of atoms or nuclei, designated as quantum bits, or qubits, would serve as the computer's processor and memory. A quantum computer would be capable of doing a computation by working on many aspects of the problem at the same time, on many different numbers at once, and then using these partial results to arrive at a single answer. For example, deciphering the correct code from a 400-digit number would take a supercomputer millions of years. However, a quantum computer about the size of a teacup could do the job in about a year.
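The idea of working "on many different numbers at once" can be illustrated by simulating a few qubits on an ordinary computer. The sketch below is a classical simulation under simplifying assumptions, not a quantum program: it stores one amplitude for each of the 2^n possible values of n qubits and applies the standard Hadamard gate to each qubit in turn. The function names are illustrative.

```python
import math


def apply_hadamard(state, target):
    """Apply a Hadamard gate to qubit `target` of a state vector."""
    h = 1 / math.sqrt(2)
    new_state = list(state)
    bit = 1 << target
    for i in range(len(state)):
        if (i & bit) == 0:
            # Amplitudes of the two basis states that differ only in `target`.
            a, b = state[i], state[i | bit]
            new_state[i] = h * (a + b)
            new_state[i | bit] = h * (a - b)
    return new_state


def uniform_superposition(n_qubits):
    """Start with every qubit at 0, then apply a Hadamard gate to each one."""
    state = [0.0] * (2 ** n_qubits)
    state[0] = 1.0
    for qubit in range(n_qubits):
        state = apply_hadamard(state, qubit)
    return state


state = uniform_superposition(3)
print(len(state))                      # 8 basis states for 3 qubits
print([round(a, 4) for a in state])    # every amplitude is 1/sqrt(8)
print(round(sum(a * a for a in state), 6))  # probabilities sum to 1.0
```

Three qubits already carry eight values at once, and each added qubit doubles that number. The same doubling is why this kind of simulation quickly becomes impractical on conventional hardware.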

